chainsawriot

On the idea of executable research compendium

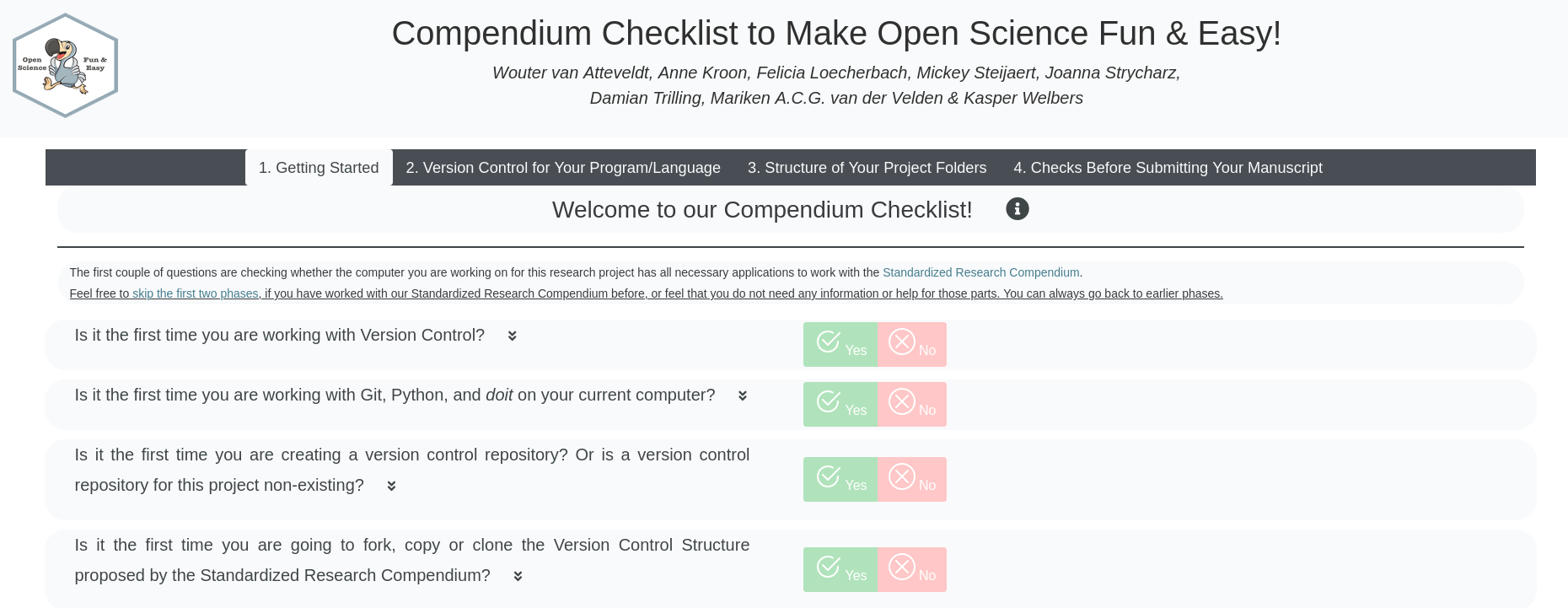

Source: Make Open Science Fun & Easy!

rang 0.2.0 is now on CRAN. David and I have also posted the preprint “rang: Reconstructing reproducible R computational environments” on arXiv.

As always, I don’t want to write a blog post about the functionalities of rang, because that would be incredibly boring. My native language has a saying for that: “A florist praises his flowers for smelling nice.” I just want to write boring blog posts, not incredibly boring ones. So I want to write about something else.

The starting point of this blog post is an idea we are toying with in the preprint: making executable research compendia.

The truth is that making executable research compendia was not the original motivation for developing rang (previously named gran). In the documentation, we frame rang mostly as an archaeological tool. Moving in the direction of research compendia in the preprint was semi-accidental. But just as shovels, brushes, and even carbon dating can be used for non-archaeological purposes, rang should not be limited by our artificial framing. When software changes this fast, tracing what we were doing a few months ago is not so different from archaeology. Given a publication cycle of “at least a year”, what you see in an academic journal is, on the modern technological time scale, feathered dinosaurs or mammoths; Tutankhamun or the Terracotta Army. So we need archaeological tools to dig through any published work. We should also think about our own work in archaeological terms: can you dig the same artefact out of the same ruin now, and in the near future?

Interestingly, the idea of the (executable) research compendium originated in the R community. (I will use “research compendium” and “executable compendium” interchangeably in this blog post.) Robert Gentleman and Duncan Temple Lang, two important contributors to R, apparently gave the first definition of the term “compendium” in 2004 [1]. Of course, I might be wrong, and someone might have defined the term earlier. So please do your own homework; I am not your ChatGPT.

A compendium, as Gentleman and Temple Lang define it, is “a container for one or more dynamic documents and the different elements needed when processing them, such as code and data.” Implementations of the executable compendium have been available since more or less the same time, e.g. Ruschhaupt et al. (2004). You might ask: why R? The answer is simple. R had the first widely used literate programming implementation, Sweave. Subsequent implementations, such as the one based on org-mode by Schulte et al. (2012), did not catch on (sorry to say this, Emacs community).

XKCD 927

I believe that all supporters of the Open Science movement would agree that the executable compendium is a good idea. It is such a good idea that we have a dozen implementations and several (competing) standards. Nüst, a very important figure of Open Science here in Germany, authored a 32-page technical specification for the Executable Research Compendium. If we look only at actual implementations in the R world, there are rrtools, rcompendium, template, manuscriptPackage, and ProjectTemplate. rOpenSci also tried to come up with a standard in 2017.

The concept of the executable compendium is also very dear to my heart. In my circle of communication researchers, the all-star team in Amsterdam known as CCS created an implementation called the CCS Compendium and presented it at the ICA 2020 Conference. I learned about the concept of the compendium from them. I had access to the implementation before 2020, even though I am not part of the all-star team, and I contributed some minor commits to it. I will come back to the CCS Compendium later.

Interoperability is one of the many guiding principles of rang. As I think and rethink the idea of preparing executable research compendia with rang in our preprint, a question naturally comes up: should we make this a feature of rang? To rephrase it a bit: should we create yet another implementation of the executable compendium, based on rang?

Every time I have this kind of idea, I think of XKCD 927.

XKCD 927 (CC BY-NC 2.5, by Randall Munroe)

Of course I would say my idea of a research compendium is better than the other ideas out there. I am the florist; my flowers must smell nicer than yours. It is extremely easy to create yet another standard. What is difficult is following someone else’s standard. It is the typical Not-Invented-Here Syndrome.

Chan’s Law of Workflow Automation

“We hope that, as more and more studies use our compendium, it will become even clearer which needs it fulfills well, and where it can still be improved.”

So now, I can go back to the CCS Compendium.

So many standards. So little usage. My article “Four Best Practices” actually comes with an executable research compendium built with the CCS Compendium by my fellow communication researchers in Amsterdam. As far as I know, only two published articles use the CCS Compendium: one by the megastars of the CCS group themselves and the other by a nobody, i.e. yours truly. Again, I might be wrong, and I am not your ChatGPT.

rrtools by Marwick et al. is arguably the more popular format. (Marwick is a famous archaeology professor at the University of Washington.) As of writing, it has 621 stars on GitHub versus 11 for the CCS Compendium, which is a very unscientific measurement. Yet despite numerous tutorials, I could find only three real-life usage examples on GitHub and OSF. Searching for rrtools on Dataverse turns up nothing. Copy and paste the above ChatGPT sentence here.

This basically answers the question above about whether we (the development team of rang) should introduce yet another format. But do I like the existing implementations of the research compendium? Let me say a bit about the technology used in the CCS Compendium and rrtools.

The CCS Compendium uses a Python tool called doit for workflow automation. In a script, one declares which files are required and which files get generated. doit then automatically determines the order in which all the scripts are executed in batch. rrtools, by contrast, is concerned with the batch execution (i.e. rendering) of the Quarto file, and code and data are organized as a compendium for that rendering. It has no concept of workflow automation. targets and its predecessor drake could be used for that.
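To make the doit idea concrete, here is a minimal sketch in plain Python. It is not doit’s actual API. Each hypothetical step declares the files it reads (`file_dep`) and writes (`targets`), and the run order is derived from those declarations with the standard library’s `graphlib.TopologicalSorter` (Python 3.9+). The step names and file names are made up for illustration. The `file_dep`/`targets` keys only borrow the vocabulary of doit’s task dictionaries.

```python
from graphlib import TopologicalSorter

# Hypothetical steps of a research compendium, deliberately listed out of order.
# Each step declares the files it reads ("file_dep") and writes ("targets").
STEPS = {
    "render_paper": {"file_dep": ["fig1.png", "model.rds"], "targets": ["paper.pdf"]},
    "make_figures": {"file_dep": ["clean.csv", "model.rds"], "targets": ["fig1.png"]},
    "fit_model": {"file_dep": ["clean.csv"], "targets": ["model.rds"]},
    "clean_data": {"file_dep": ["raw.csv"], "targets": ["clean.csv"]},
}


def execution_order(steps):
    """Return an order in which every step runs after the steps producing its inputs."""
    # Map each generated file to the step that produces it.
    producer = {}
    for name, spec in steps.items():
        for target in spec["targets"]:
            producer[target] = name
    # A step depends on the producers of its input files; files that no step
    # produces (e.g. raw.csv) are treated as given inputs.
    graph = {
        name: {producer[f] for f in spec["file_dep"] if f in producer}
        for name, spec in steps.items()
    }
    # static_order() raises graphlib.CycleError if the declarations form a cycle.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(execution_order(STEPS))
    # prints ['clean_data', 'fit_model', 'make_figures', 'render_paper']
```

Because the order comes from the declared inputs and outputs, the order in which the steps are listed does not matter. That is the convenience described above.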

All of this reminds me of Zawinski’s Law: “Every program attempts to expand until it can read mail. Those programs which cannot so expand are replaced by ones which can.” By analogy, I can come up with Chan’s Law of Workflow Automation: “Every workflow automation program attempts to expand until it reinvents Make. Those programs which cannot so expand are replaced by ones which can.”

doit, targets, drake, luigi, snakemake, Rake, Ninja, cargo-make… We just don’t want to use Make, even though The Turing Way suggests using Make and Software Carpentry suggests using Make. Although the CCS Compendium uses doit, I still needed Make in the above-mentioned executable research compendium of “Four Best Practices.”
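To make the comparison with Make concrete: the rule at the heart of Make, as described in the GNU Make manual, is that a target is remade if it does not exist or if it is older than any of its prerequisites. Below is a minimal Python sketch of that staleness check. The file names and modification times are hypothetical numbers. They are passed in as a dictionary so that the example does not touch the file system.

```python
def needs_rebuild(target, prerequisites, mtimes):
    """Make-style rule: rebuild if the target is missing or older than a prerequisite.

    mtimes maps file names to modification times; a missing key means the
    file does not exist. Prerequisites are assumed to exist in this sketch.
    """
    if target not in mtimes:
        return True
    return any(mtimes[p] > mtimes[target] for p in prerequisites)


if __name__ == "__main__":
    # Hypothetical modification times (e.g. seconds since the epoch).
    mtimes = {"raw.csv": 100, "clean.csv": 200, "model.rds": 250, "analysis.R": 300}
    # analysis.R was edited after model.rds was produced, so model.rds is stale.
    print(needs_rebuild("model.rds", ["clean.csv", "analysis.R"], mtimes))  # True
    # paper.pdf has never been built.
    print(needs_rebuild("paper.pdf", ["model.rds"], mtimes))  # True
    # clean.csv is newer than raw.csv, so nothing needs to be redone.
    print(needs_rebuild("clean.csv", ["raw.csv"], mtimes))  # False
```

Applying this check to every target in dependency order is, roughly, what each of the tools listed above ends up reimplementing.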

After all of the above discussion, I seem to have arrived at a conjecture: maybe we don’t need a template a.k.a. standard a.k.a. reusable implementation of the executable compendium, because there is no standard workflow. Organizing a workflow around an R package (the approach used by rrtools) might feel unnatural to many. Meanwhile, the intertwining of Python, Make, and whatever else might feel unnatural to many others. That might be the reason why we have so many underused templates a.k.a. standards a.k.a. reusable implementations, and not just the Not-Invented-Here Syndrome.

Coda

What we really need, perhaps, is what The Turing Way provides: a description of a research compendium.

- Files should be organized in a conventional folder structure;

- Data, methods, and output should be clearly separated;

- The computational environment should be specified.

And rang is helpful for the third point.

[1] Gentleman, R., & Temple Lang, D. (2007). Statistical analyses and reproducible research. Journal of Computational and Graphical Statistics, 16(1), 1-23. doi. The paper was officially published in 2007, but an earlier draft was published as early as 2004. There is also an earlier conceptualization called “Integrated Documents” by Sawitzki (2002).