chainsawriot

Structure and Interpretation of Software Testability

I would like to start (yet) another series. My plan is to write four articles, though more likely than not I won’t finish all four. I am not claiming to be a master in this business, but if you can learn a thing or two, that would be great. After 19 years of writing this blog, I know that its biggest reader is my future self. I am writing this for him. I want him to remember that he was once an R developer, just as he was once a dancer, actor, tech blogger, pundit, novelist, musician, public health researcher, etc.

The first lesson is on software testing, which I think is the most important one. Later on, I’ll hopefully talk about the development environment, version control, and a more “soft” lesson on handling frustration.

Let’s talk about software testing first.

Empowerment statement

In this post, I am going to explain what makes software testable.

Software testing

I have always wanted to talk about this, and to teach it if possible. But many people consider it an advanced topic, so I can’t teach it as a BA or MA course that I could then put on my CV to improve my employability in academia.

Certainly, most people just want to be users. Even if you aspire to be a developer, you probably enjoy the creative process of implementing something rather than testing it. In the realm of R package development, this is particularly evident. Compared with other programming language communities, most R developers are not full-time software developers. Most of them are political scientists, sociologists, epidemiologists, or whatever -ists or -ians. Software out, papers out, and we are done. We get the citations, the fame, and better employability. We don’t actually need to care about the robustness of our software.

Even with this culture, there are developers I admire. For example, I admire the quanteda group. Of course, the RStudio group has great influence too. These two groups have something in common: they know that their software will be used by many users, and thus they must ensure its robustness.

Using statistical notation, software testing can be defined as follows: given a particular input x and its expected output y, a software test checks whether the software generates from x an output ŷ such that ŷ = y. A very simple example: we have an input x := 2 + 2 and the expected output y = 4. Now we execute software z with the input x, and it gives us an output ŷ of 5. The software test checks whether ŷ = y. In this case, 4 != 5, so this software test fails. Our software z does not work as expected, unless we are living in an authoritarian country and need to doublethink.

Translated into R code, the last paragraph would be:

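```r
require(testthat)

## z stands for the software under test; this toy version is a
## hypothetical stand-in that evaluates an arithmetic expression
z <- function(x) eval(parse(text = x))

x <- "2 + 2"    # input x
y <- 4          # expected output y
hat_y <- z(x)   # observed output ŷ

## passes for this z; a z that returned 5 would fail here
expect_equal(hat_y, y)
```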

Robustness

Okay. You might argue that your software works. Why do you still need to test it?

First, I am sure that your software works sometimes. But does it work all of the time? Let’s relax this a little: how about most of the time? Abraham Lincoln said, “You can fool all of the people some of the time, and some of the people all of the time. But you cannot fool all of the people all of the time.” Likewise, you cannot fool all of the people into believing that your software works all of the time.

“All of the time” also includes the future. How can you be sure that your software still functions after another feature is introduced?

Robustness is the quality of software that functions all of the time and in different situations. Robust software is well-tested. In order to be well-tested, software first needs to be testable.

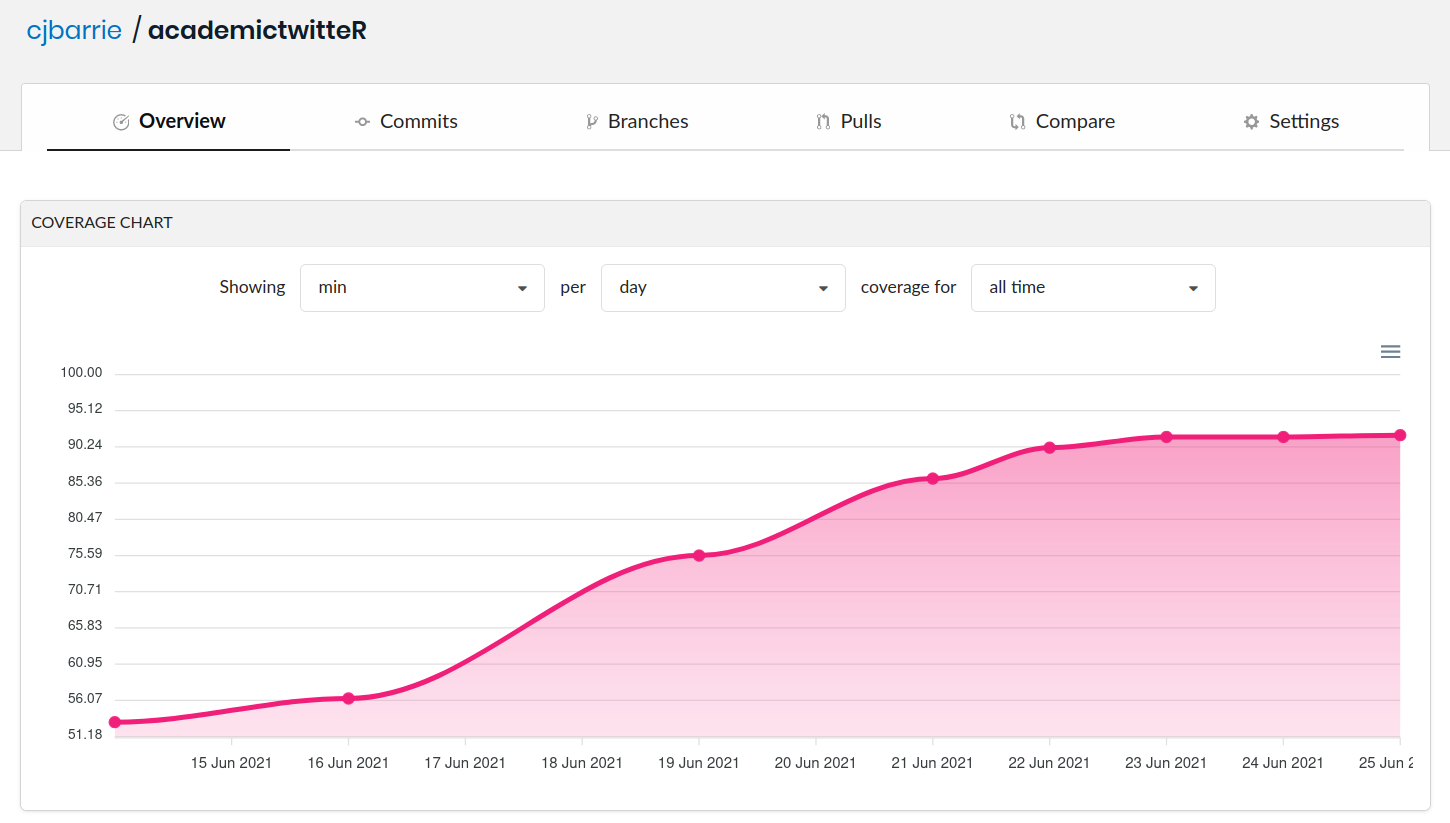

Recently, I started helping with the development of academictwitteR. Of course, I also have my own packages, such as oolong. These packages have one “problem” in common: they have low testability (to begin with). academictwitteR depends on the external Twitter API; oolong is a Shiny app wrapped in an R package. These external dependencies make software difficult to test. True story: I implemented a very simple feature in academictwitteR in 2 minutes, but struggled for a whole day to make it testable. Through making it testable, I learned that the feature was not robust enough.

But what gives this software low testability? To answer that, we need to define software testability.

Software testability

Of course, software testability means whether or not software is testable. But what makes software testable? Freedman (1991) [1] defines two major aspects of software testability: 1) controllability and 2) observability. Modern interpretations add more aspects, but controllability and observability remain the fundamentals.

Controllability

Controllability is how easy it is for testers to provide distinct inputs x to the software. It sounds simple, but it is actually very difficult. For example, a “simple” R function takes its inputs as arguments, such as x1 and x2 in sum(x1, x2). You can simply plug in x1 and x2 and then observe the result. But what about a Shiny app? A Shiny app is meant to be a GUI, and you cannot supply its input x as easily as with the “simple” R function above.

For Shiny, you need shinytest to make the software controllable. shinytest drives a headless browser (PhantomJS) that can be controlled programmatically. Only recently did I learn how to use shinytest to make the Shiny components in oolong controllable, so that they can be tested automatically. In previous versions of oolong, I didn’t know that clicking “confirm” without making a choice hangs the Shiny app. This sort of error can only be found and tested with an automated check like this:

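```r
require(testthat)
require(shinytest)
require(oolong)

## Export a word intrusion test built from oolong's example topic model as a Shiny app
dir <- tempdir()
x <- wi(abstracts_stm)
export_oolong(x, dir = dir, verbose = FALSE)

## Launch the app in a headless browser
test <- ShinyDriver$new(dir)

## Input x: click "confirm" without making a choice
test$click("confirm")

## Expected y: reading the app's values raises no error
expect_error(test$getAllValues(), NA)

test$stop()
```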

This code uses shinytest to create a new ShinyDriver instance (i.e., a headless browser) called test, which launches the Shiny app. We can then simulate clicking “confirm” without making a choice (our input x) and check whether the output ŷ matches our expected y. In this case, we expect no error, which is expressed with the counter-intuitive expect_error(code, NA) syntax.
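As a side note, newer versions of testthat (3.1.5 and later, as far as I know) provide expect_no_error(), which states the same expectation more readably. A minimal sketch comparing the two idioms, where log(10) merely stands in for any code that should run without an error:

```r
library(testthat)

## The classic idiom: passing NA as the expected error means "expect no error"
expect_error(log(10), NA)

## A more readable alternative (assumes testthat >= 3.1.5)
expect_no_error(log(10))
```

Both expectations pass silently here; either would fail if the code inside raised an error.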

Another controllability issue arises when a function is so large and complex that you cannot control the input x of a particular part of it. For example, you have a monster function that retrieves data from the Twitter API based on a query, wrangles the data, and then exports it. Now you know that the export part is not functioning as expected: certain kinds of collected tweets cannot be exported. The input x should therefore be some collected tweets. Unfortunately, the export part is untestable as it stands, because the input x of this function is a query, not some collected tweets.

This problem can be solved by refactoring and modularizing the software. There is no shame in writing helper functions, and helper functions are actually easier to test than a monster function.
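A minimal sketch of such a refactoring, assuming the collected tweets are a data frame with the columns id, created_at and text. The functions wrangle_tweets(), export_tweets() and collect_and_export() are hypothetical illustrations, not academictwitteR’s actual code. Once the export step is its own function, its input x can be a small, hand-made set of collected tweets instead of a query:

```r
library(testthat)

## Hypothetical helper: keep only the columns we want to export
wrangle_tweets <- function(raw) {
  raw[, c("id", "created_at", "text")]
}

## Hypothetical helper: write tweets to a CSV file, return the path invisibly
export_tweets <- function(tweets, path) {
  utils::write.csv(tweets, path, row.names = FALSE)
  invisible(path)
}

## The former monster function, now a thin composition of the helpers.
## `fetch` is a hypothetical stand-in for whatever queries the API.
collect_and_export <- function(query, path, fetch) {
  raw <- fetch(query)
  export_tweets(wrangle_tweets(raw), path)
}

test_that("collected tweets containing commas can be exported", {
  tweets <- data.frame(
    id = c("1", "2"),
    created_at = c("2020-06-20T00:00:00Z", "2020-06-20T01:00:00Z"),
    text = c("hello, world", "second tweet"),
    stringsAsFactors = FALSE
  )
  path <- tempfile(fileext = ".csv")
  export_tweets(tweets, path)
  ## read back as character so no column is silently re-typed
  expect_equal(read.csv(path, colClasses = "character"), tweets)
})
```

The test never touches the network: it controls the export step’s input directly, which the monster function did not allow.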

Yet another controllability issue is ignoring hidden assumptions. For example, a function dealing with time may give different results in different time zones. More likely than not, the time difference between 17:50 local time and 17:50 GMT is not 0 minutes. For example, the difference on a machine using CEST (UTC+2) is 120 minutes, and on a machine using BST (UTC+1) it is 60 minutes; only on a machine using GMT is it 0 minutes. In this case, your software, as well as your test, should treat these hidden assumptions, such as the underlying time zone setting, as part of the input x. By doing so, you can make sure that your software works on computers with different settings.
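A minimal sketch, assuming a hypothetical helper minutes_from_gmt(). The time zone is an explicit argument rather than a silent dependency on the machine’s setting, so the test can control it and check each case. The example date is in June so that daylight saving time (CEST, BST) is in effect:

```r
library(testthat)

## Hypothetical helper: minutes between a wall-clock time read in time zone
## `tz` and the same wall-clock time read in GMT. The default tz = "" means
## "the machine's current time zone", i.e. the hidden assumption; exposing
## it as an argument makes it controllable.
minutes_from_gmt <- function(clock = "2021-06-21 17:50:00", tz = "") {
  local_time <- as.POSIXct(clock, tz = tz)
  gmt_time <- as.POSIXct(clock, tz = "GMT")
  as.numeric(difftime(gmt_time, local_time, units = "mins"))
}

test_that("the result is correct under different time zone settings", {
  expect_equal(minutes_from_gmt(tz = "Europe/Berlin"), 120)  # CEST in June
  expect_equal(minutes_from_gmt(tz = "Europe/London"), 60)   # BST in June
  expect_equal(minutes_from_gmt(tz = "GMT"), 0)
})
```

Without the tz argument, this test would pass or fail depending on where it runs.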

Last but not least, the biggest, and perhaps the most common, hidden assumption is using readLines to ask for user input. By default, readLines reads from the connection con = stdin(), i.e., keyboard input from the user. A testing framework such as testthat cannot pass the input x through stdin(), so the test gets stuck waiting for user input. Applying the same rule, you should make con an input (an argument) of your software so that the software is controllable. You can then change con to something else, e.g., a text file, to simulate user input.
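A minimal sketch of this idea, using a hypothetical function ask_confirm(). The connection con defaults to stdin(), so interactive use is unchanged, while the test swaps in a textConnection() holding the simulated answer. readLines() also accepts a file name, so a temporary text file would work too:

```r
library(testthat)

## Hypothetical function: asks the user for a yes/no answer.
## `con` is an argument (defaulting to stdin()) so tests can replace it.
ask_confirm <- function(con = stdin()) {
  message("Proceed? [y/n]")
  answer <- readLines(con = con, n = 1)
  tolower(trimws(answer)) %in% c("y", "yes")
}

test_that("ask_confirm reads the answer from any connection", {
  yes_con <- textConnection("yes")
  expect_true(suppressMessages(ask_confirm(yes_con)))
  close(yes_con)

  no_con <- textConnection("n")
  expect_false(suppressMessages(ask_confirm(no_con)))
  close(no_con)
})
```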

Observability

Observability is how easy it is for testers to observe the output ŷ of the software. Like controllability, it sounds simple, but it is extremely difficult in many cases.

academictwitteR depends on the Twitter REST API. To observe the results, the software needs to make actual queries. On your machine, you can surely make these queries: you have the access token, enough of the monthly tweet cap, and an internet connection. Some APIs might have IP restrictions, and your IP is certainly on the whitelist. The Twitter API should work 100% of the time, right?

But what if you need to run this test on your friends’ computers? Or on a CI service such as GitHub Actions? Simply put, you cannot even observe the output ŷ when any step involved in making actual queries fails, be it a missing access token, a reached rate limit, no internet connection, or even a dead Twitter API.

In this case, making queries is just a precondition for many downstream tasks of the software. We can make the output ŷ of these tasks observable by assuming that this precondition is met. One simple workaround is to use a mock API.
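httptest is one way to build such a mock. Another, more general technique is dependency injection: pass the function that makes the request as an argument, and give the test a fake that always returns the same canned response. A minimal sketch, where count_tweets() and fake_fetch() are hypothetical and the response layout only loosely mimics a Twitter API v2 payload:

```r
library(testthat)

## Hypothetical downstream task: `fetch` performs the request and returns
## the parsed response as a list. In production it would call the real API.
count_tweets <- function(query, fetch) {
  res <- fetch(query)
  length(res$data)
}

## A fake fetcher: always returns the same canned response, no network needed
fake_fetch <- function(query) {
  list(data = list(
    list(id = "1", text = "first tweet"),
    list(id = "2", text = "second tweet")
  ))
}

test_that("downstream code works when the query precondition is met", {
  expect_equal(count_tweets("#standwithhongkong", fetch = fake_fetch), 2)
})
```

The trade-off: injection needs no extra package, but the canned response is written by hand, whereas httptest records a real one.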

httptest allows you to record “canned” responses from a RESTful API. These canned responses can then be used as a mock API, which always gives you the same response without making any actual queries. In the following code snippet, the commented-out region records a canned response from the Twitter API (this step makes a real query, so it needs working API access). We can then use the mock API to test our actual function make_query.

```r
require(testthat)
require(httptest)

## Query parameters, shared by the recording step and the test
params <- list(
  "query" = "#standwithhongkong",
  "max_results" = 500,
  "start_time" = "2020-06-20T00:00:00Z",
  "end_time" = "2020-06-21T00:00:00Z",
  "tweet.fields" = "attachments,author_id,context_annotations,conversation_id,created_at,entities,geo,id,in_reply_to_user_id,lang,public_metrics,possibly_sensitive,referenced_tweets,source,text,withheld",
  "user.fields" = "created_at,description,entities,id,location,name,pinned_tweet_id,profile_image_url,protected,public_metrics,url,username,verified,withheld",
  "expansions" = "author_id,entities.mentions.username,geo.place_id,in_reply_to_user_id,referenced_tweets.id,referenced_tweets.id.author_id",
  "place.fields" = "contained_within,country,country_code,full_name,geo,id,name,place_type"
)

## Run once, with real API access, to record a canned response:
## start_capturing(simplify = FALSE)
## res <- academictwitteR:::make_query(url = "https://api.twitter.com/2/tweets/search/all", params = params)
## stop_capturing()

## Replay the canned response: no actual query is made
with_mock_api({
  test_that("Make query and errors", {
    expect_error(academictwitteR:::make_query(url = "https://api.twitter.com/2/tweets/search/all", params = params), NA)
  })
})
```

As with controllability, the results of Shiny apps are not always observable. In a GUI, our eyes are very keen at noticing changes. But these changes are not “observable” by automated software such as testthat.

Using the same oolong test case as above:

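```r
require(testthat)
require(shinytest)
require(oolong)

## Export a word intrusion test built from oolong's example topic model as a Shiny app
dir <- tempdir()
x <- wi(abstracts_stm)
export_oolong(x, dir = dir, verbose = FALSE)

## Launch the app in a headless browser
test <- ShinyDriver$new(dir)

## Input x: click "confirm" without making a choice
test$click("confirm")

## Expected y: reading the app's values raises no error
expect_error(test$getAllValues(), NA)

test$stop()
```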

Our eyes can see whether or not the Shiny app is frozen: the entire window goes grey. Unfortunately, testthat cannot observe that. One way to make this observable is to call the getAllValues() method of the headless browser instance. A frozen Shiny app gives an error, which we can then test against our expected y, i.e., no error. Again, you can only make Shiny applications observable using shinytest.

Also, monster functions usually have low observability because their intermediate states are not exposed and thus cannot be observed or tested. Again, refactoring and modularizing help.

Contribution

In this blog post, I explain the two aspects of software testability: controllability and observability. Using tricky cases such as testing Shiny apps and software that queries a RESTful API, I demonstrate how to make software controllable, observable, and thus testable. Tools such as shinytest and httptest can make difficult-to-test parts of your software, such as Shiny apps and API requests, controllable and observable.

Footnotes

1. Freedman, R. S. (1991). Testability of software components. IEEE Transactions on Software Engineering, 17(6), 553-564.