Werden wir Helden für einen Tag

Top 5 most important textual analysis methods papers of the year 2019

On Twitter, I have written a thread about the top 5 most important textual analysis methods papers of the year 2019.

As I wrote there, the list is just my personal opinion and is heavily biased towards works by communication researchers. Unexpectedly, the thread turned out to be quite popular.

I think it would be a good idea to expand the thread into a blog post so that I can properly answer the most important “why” question: why do I think those papers are important? I can also add one or two honorable mentions to each of these papers.

Before that, I should explain what makes a methods paper good and useful for communication researchers.

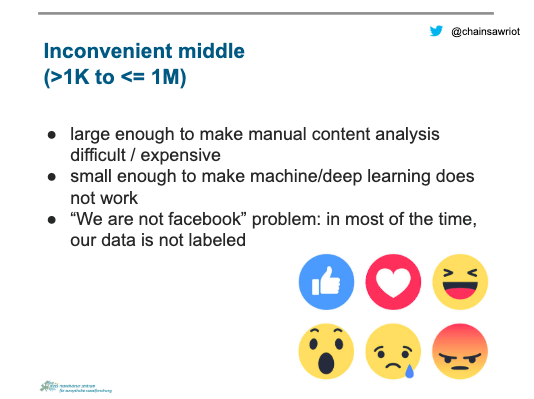

- The method should deal with “inconvenient middle” datasets

I know, I know, there are very effective deep learning algorithms that can basically solve any communication science problem. But we are just poor communication scientists who work with what I call “inconvenient middle” datasets. By “inconvenient middle” datasets, I mean datasets that are large enough to make manual content analysis difficult and expensive, but not large or good enough (e.g., data without labels) for machine learning. These datasets are usually in the range of K(ilo) (1,000 < n < 1,000,000). Not exactly big data, but not small either. In my opinion, communication scientists should develop methods to deal with this kind of “inconvenient middle” dataset.

- The method should deal with multilingual datasets

Our world is increasingly “glocalized”, not only “globalized”. One tendency of this glocalized world is to apply so-called global methods to local content. Unfortunately or fortunately, we are living in an English-dominant world, and by global methods, people usually mean methods that work well with English data. I think a good method should go beyond this English-only mindset and demonstrate its competence in working with multilingual datasets.

- The method should be validated and open-sourced

I think we should move on from the novelty factor of “applying a computational method to a large amount of data”. After all, computational methods are no longer new. Applying a computational method to a large amount of data is still exciting, but we need to do it correctly. By correct, I mean, as stated in the classic Krippendorff, “replicable and valid methods for making inferences from observed communications to their context”. For a method to be replicable, it must be open-sourced.

Even though I have these three criteria, I must admit that not all five important papers check all the boxes. Also, I am dealing with text analysis papers only. Therefore, interesting network analysis papers such as this one are not included. OK, enough of my mumbo jumbo, here is the list.

- Nicholls, T. (2019). Detecting textual reuse in news stories, at scale. International Journal of Communication, 13. [doi]

The empirical puzzle in this paper is important: text reuse in news articles from, say, PR releases or newswires. This paper is interesting because the method used (n-gram shingling, or w-shingling) is incredibly simple but super effective. It highlights the fact that one doesn’t always need complicated techniques such as machine learning. “Everything should be made as simple as possible, but not simpler”, said Einstein.

The method has been thoroughly validated against manually coded article pairs. Another nice thing about this article is that all the Python code is available. Unfortunately, the method was only tested on some US/UK data. I would think the method should work quite well with other Indo-European languages. But with Asian languages such as Chinese or Japanese, 7-gram collisions would be very common.
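To make the shingling idea above concrete, here is a minimal sketch in Python, assuming a crude regex tokenizer and n = 7. The function names and the two overlap scores (containment and Jaccard) are my own illustration; Nicholls’ released code may tokenize and score differently.

```python
import re


def shingles(text: str, n: int = 7) -> set:
    """Return the set of word n-grams ("shingles") in a text."""
    # \w+ tokenization assumes a space-delimited language (English, German, ...)
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def containment(article: str, source: str, n: int = 7) -> float:
    """Share of the article's shingles that also occur in the source text."""
    a, s = shingles(article, n), shingles(source, n)
    return len(a & s) / len(a) if a else 0.0


def jaccard(doc1: str, doc2: str, n: int = 7) -> float:
    """Symmetric overlap: shared shingles divided by all distinct shingles."""
    a, b = shingles(doc1, n), shingles(doc2, n)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


wire = ("The minister announced on Monday that the new policy will take effect "
        "next year after a long consultation.")
story = ("In a surprise move, the minister announced on Monday that the new "
         "policy will take effect next year.")
print(round(containment(story, wire), 2))  # 0.67: 8 of the story's 12 shingles come from the wire
```

Containment is asymmetric, which suits the question “how much of this story came from that wire text?”. The tokenizer is also where the caveat about Chinese or Japanese bites: these languages are not written with spaces, so this regex would treat a whole clause as one token, and one would need word segmentation or character-level shingles instead.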

Honorable mention: Nicholls, T., & Bright, J. (2019). Understanding news story chains using information retrieval and network clustering techniques. Communication Methods and Measures, 13(1), 43-59. [doi]

A paper by the same Oxford group tackles a very similar problem. The method is a bit more complicated and not as elegant as the n-gram shingling approach.

- Fogel-Dror, Y., Shenhav, S. R., Sheafer, T., & Van Atteveldt, W. (2019). Role-based Association of Verbs, Actions, and Sentiments with Entities in Political Discourse. Communication Methods and Measures, 13(2), 69-82. [doi]

Sentiment analysis, well…

Sentiment analysis is a method that has been abused by communication scientists. As argued by Puschmann & Powell (2018), it is increasingly “an application without a concept—measurement of something called ‘sentiment’ frequently fails to establish what sentiment might actually mean”.

Dictionary-based methods are notoriously bad. I could write a book about it, but one important problem of dictionary-based methods is that they cannot attribute the sentiment back to the actors mentioned in a piece of text. In the sentence “Donald Trump trumps Donald Trump Jr. in terms of grumpiness”, a regular dictionary-based method can only give an overall score of, say, “3” to denote the sentiment of the sentence. We don’t know how this sentiment is distributed between the two actors (Donald Trump and Donald Trump Jr.) in the sentence.

The method by Fogel-Dror et al. solves this problem by attributing the sentiment of verbs back to the actors in a sentence using a very simple rule-based technique. The method can also account for the influence of common reporting verbs (e.g., say) by using a little bit of machine learning. The advantage of this technique is that one doesn’t need very high-quality “labeled” data, because the method uses the grammatical information extracted by common parsers, e.g., spaCy or CoreNLP. The sentiment of verbs is determined by the common and trusty Lexicoder Sentiment Dictionary.
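To show the contrast between the two approaches described above, here is a toy sketch: a bag-of-words dictionary score next to a role-based attribution that assigns each verb’s sentiment to the subject (as agent) and the object (as target). The lexicon is hypothetical, and the (subject, verb, object) triples are assumed to be already extracted by a dependency parser such as spaCy. This is only loosely in the spirit of Fogel-Dror et al., not their patented method.

```python
from collections import defaultdict

# Hypothetical toy lexicon; a real study would use e.g. the Lexicoder Sentiment Dictionary
LEXICON = {"praises": 1, "helps": 1, "attacks": -1, "blames": -1}


def dictionary_score(text):
    """Bag-of-words scoring: a single number for the whole sentence."""
    return sum(LEXICON.get(token, 0) for token in text.lower().split())


def role_based_scores(triples):
    """Attribute each verb's sentiment to the actors by grammatical role.

    `triples` are (subject, verb, object) tuples, assumed to come from a
    dependency parser. The subject is counted as the agent of the action
    and the object as its target; either may be None.
    """
    scores = defaultdict(lambda: {"as_agent": 0, "as_target": 0})
    for subject, verb, obj in triples:
        value = LEXICON.get(verb.lower(), 0)
        if subject:
            scores[subject]["as_agent"] += value
        if obj:
            scores[obj]["as_target"] += value
    return dict(scores)


sentence = "Smith praises Jones but Brown attacks Smith"
print(dictionary_score(sentence))  # 0: the praise and the attack cancel out
triples = [("Smith", "praises", "Jones"), ("Brown", "attacks", "Smith")]
print(role_based_scores(triples)["Smith"])  # {'as_agent': 1, 'as_target': -1}
```

The sentence-level score of 0 hides the fact that Smith is both praising someone and being attacked; keeping the roles apart preserves that information.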

The method has been validated with a relatively small dataset of English news coverage of the Israeli-Palestinian conflict. At the current stage, it can only attribute sentiment from verbs back to an actor. But this method demonstrates how useful rule-based methods are for dealing with “inconvenient middle” datasets. Unfortunately, the code of this method is not open. To make matters worse, the method is patented.

Honorable mention: Boukes, M., van de Velde, B., Araujo, T., & Vliegenthart, R. (2019). What’s the Tone? Easy Doesn’t Do It: Analyzing Performance and Agreement Between Off-the-Shelf Sentiment Analysis Tools. Communication Methods and Measures, 1-22. [doi]

This paper from the Amsterdam group confirms that off-the-shelf sentiment tools such as LIWC or SentiStrength should not be applied without validation, because these tools can barely predict human-coded news sentiment. Valuable insight indeed, and a message worth reinforcing.
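What “validation” means in practice is comparing a tool’s output with human codes on the same items. A minimal sketch of that check, using raw accuracy and Cohen’s kappa (agreement corrected for chance); the labels are invented, and these two metrics are my choice for illustration, not necessarily the ones Boukes et al. report.

```python
from collections import Counter


def accuracy(tool, human):
    """Share of items on which the tool's label matches the human code."""
    if len(tool) != len(human):
        raise ValueError("label lists must have the same length")
    return sum(t == h for t, h in zip(tool, human)) / len(human)


def cohens_kappa(tool, human):
    """Agreement corrected for what two independent labelers would reach by chance."""
    n = len(human)
    observed = accuracy(tool, human)
    tool_counts, human_counts = Counter(tool), Counter(human)
    categories = set(tool) | set(human)
    expected = sum(tool_counts[c] * human_counts[c] for c in categories) / n ** 2
    if expected == 1:
        return float("nan")  # undefined: both sides used one identical label throughout
    return (observed - expected) / (1 - expected)


human = ["neg", "neg", "pos", "neu", "pos", "neg"]
tool = ["neg", "pos", "pos", "neu", "neg", "neg"]
print(round(accuracy(tool, human), 2), round(cohens_kappa(tool, human), 2))  # 0.67 0.45
```

Raw agreement looks respectable here, but once chance agreement is subtracted the picture is much less rosy, which is exactly why a tool’s output should be checked before it is trusted.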

- Tolochko, P., & Boomgaarden, H. G. (2019). Determining Political Text Complexity: Conceptualizations, Measurements, and Application. International Journal of Communication, 13, 21. [doi]

I love this paper. Seriously.

Once again, a good method should be as simple as possible, but not simpler. This paper starts with the theoretical concept of text complexity and its two dimensions, namely semantic complexity and syntactic complexity. It then devises a simple composite measure for these two dimensions. The method can deal with “inconvenient middle” datasets pretty well because the heavy lifting is done by spaCy. Also, the method is language-independent: the authors have demonstrated its applicability with both English and German data. A subsequent paper by the same group demonstrates, in an experiment, how the text complexity of political information can affect readers’ political knowledge, using this two-dimensional measurement of text complexity.
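To illustrate what a two-dimensional complexity profile can look like, here is a minimal sketch. The two indicators are my own stand-in proxies (word entropy for the semantic side, mean sentence length for the syntactic side), not the indicators from Tolochko & Boomgaarden, and the naive regex sentence splitter replaces the spaCy parsing the paper relies on.

```python
import math
import re
from collections import Counter


def word_entropy(text):
    """Shannon entropy (bits) of the word distribution: a stand-in for semantic complexity."""
    words = re.findall(r"\w+", text.lower())
    n = len(words)
    if n == 0:
        return 0.0
    return -sum(c / n * math.log2(c / n) for c in Counter(words).values())


def mean_sentence_length(text):
    """Average words per sentence: a crude stand-in for syntactic complexity."""
    # Naive split on ., ! and ?; a parser such as spaCy would do this far better
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        return 0.0
    return sum(len(re.findall(r"\w+", s)) for s in sentences) / len(sentences)


def complexity_profile(text):
    """Two-dimensional profile, kept as two numbers rather than one score."""
    return {"semantic": word_entropy(text), "syntactic": mean_sentence_length(text)}


print(complexity_profile("Die Regierung plant eine Reform. Die Opposition ist dagegen."))
```

Because Python’s `\w` is Unicode-aware, the same code runs on English or German text without changes, which mirrors (in a much cruder way) the language independence praised above.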

Unfortunately, the code of the method is not open. Did I say I love this paper? Yours truly has reimplemented an R version.

Honorable mention: Benoit, K., Munger, K., & Spirling, A. (2019). Measuring and explaining political sophistication through textual complexity. American Journal of Political Science, 63(2), 491-508. [doi]

This paper came out almost exactly at the same time as the Tolochko & Boomgaarden paper. The R package for the method has long been published on Kenneth Benoit’s GitHub, and all the materials of the paper are open. But one drawback of this method is its reliance on word rarity information from the Google Books dataset. Because of that, the method is language-dependent.
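Why does a word rarity feature make a method language-dependent? A minimal sketch, assuming rarity is measured as the negative log10 of a word’s relative frequency in a reference corpus. The tiny frequency table is made up, and Benoit et al.’s exact formula may differ; the point is only that the reference table belongs to one language.

```python
import math
import re

# Hypothetical relative word frequencies from a large English reference corpus
REFERENCE_FREQ = {"the": 0.05, "government": 3e-4, "tax": 1.2e-4, "hypothecation": 5e-8}


def mean_rarity(text, reference, floor=1e-9):
    """Average rarity of a text's words, as -log10 of their reference frequency.

    Words missing from the reference get the (arbitrary) `floor` frequency,
    i.e. they are treated as extremely rare.
    """
    words = re.findall(r"\w+", text.lower())
    if not words:
        return 0.0
    return sum(-math.log10(reference.get(w, floor)) for w in words) / len(words)


print(mean_rarity("the government tax", REFERENCE_FREQ))
print(mean_rarity("Steuerreform Bundestag", REFERENCE_FREQ))  # every German word hits the floor
```

With an English table, any German text looks maximally “sophisticated” simply because none of its words are found; a separate reference corpus would be needed for every language.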

- Guo, L., Mays, K., Lai, S., Jalal, M., Ishwar, P., & Betke, M. (2019). Accurate, Fast, But Not Always Cheap: Evaluating “Crowdcoding” as an Alternative Approach to Analyze Social Media Data. Journalism & Mass Communication Quarterly, 1077699019891437. [doi]

Crowdcoding, a wild west.

I have a personal grudge against this method, but I don’t want my prejudice and trauma to taint my decision. Well, crowdcoding is still a good method. The 2017 Article of the Year Award winner of Communication Methods and Measures is a paper about crowdcoding. (I will come back to this later.)

Manual coding is expensive; so expensive that one cannot do manual coding with “inconvenient middle” datasets. I once joked with one of my collaborators: why don’t we hire two professors to code our data full-time? Admit it or not, the only reason we do crowdcoding, or crowdsourcing in general, is that it is a cost-cutting measure. Previous papers usually assume that crowdcoding is cheaper than manual coding; thus, if one can demonstrate that the results from crowdcoding are good enough compared to manual coding, then crowdcoding is a cheap but viable alternative.

Back in the day when I was an epidemiologist, I often cosplayed as a health economist by comparing two medical interventions in cost-effectiveness terms. I think if we consider crowdcoding a cost-cutting measure, then we must evaluate the two methods not only on methodological equivalence but also with a proper economic analysis.

The paper by Guo et al. is almost there. I like this paper for taking cost into consideration, and the authors give us a counter-intuitive conclusion: crowdcoding is not always cheaper than manual coding.

I think a slight improvement on this paper would be to quantify the marginal cost of a one-unit increase in either the speed or the quality of coding when moving from one method to the other, just like health economists do.
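The health economists’ tool for this marginal cost is the incremental cost-effectiveness ratio (ICER): the difference in cost divided by the difference in effect between two options. A minimal sketch; the costs and accuracies below are made up purely for illustration and are not figures from Guo et al.

```python
def icer(cost_new, cost_ref, effect_new, effect_ref):
    """Incremental cost-effectiveness ratio of `new` versus `ref`.

    Returns the extra cost paid for each extra unit of effect (e.g. one
    percentage point of accuracy against a gold standard).
    """
    delta_effect = effect_new - effect_ref
    if delta_effect == 0:
        raise ValueError("Equal effects: just compare the costs.")
    return (cost_new - cost_ref) / delta_effect


# Made-up numbers: trained coders vs. crowdcoding on the same task
expert_cost, expert_accuracy = 2000.0, 90  # USD, % agreement with gold standard
crowd_cost, crowd_accuracy = 1500.0, 85
print(icer(expert_cost, crowd_cost, expert_accuracy, crowd_accuracy))  # 100.0
```

Read it as: in this toy example, each extra percentage point of accuracy from trained coders costs 100 USD. The same function works with speed (e.g. hours saved) as the effect. If one method is both cheaper and better, it simply dominates and no ratio is needed.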

Did I mention Figure Eight is no longer a viable option for researchers? The marginal cost of using Figure Eight would be astronomical now.

Honorable mention: Lee, S. J., Liu, J., Gibson, L. A., & Hornik, R. C. (2019). Rating the Valence of Media Content about Electronic Cigarettes Using Crowdsourcing: Testing Rater Instructions and Estimating the Optimal Number of Raters. Health Communication, 1-11. [doi]

Well, this one is essentially on the same topic. Using a bootstrapping method, Lee et al. show that the optimal number of crowdcoders is 9. But could you afford 9 coders? In addition, mind you, for some languages (e.g., languages starting with the letter G or A), the total number of coders on a crowdcoding platform might be fewer than 9.
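The bootstrapping idea can be sketched as follows: for each candidate number of raters k, repeatedly resample k ratings per item, average them, and check how well the averages track a reference score. This is a minimal sketch on simulated data; the function names are hypothetical, and I do not know the exact procedure or stopping rule Lee et al. used.

```python
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def bootstrap_rater_curve(ratings, reference, max_k, reps=200, seed=42):
    """Mean correlation with a reference when k crowd ratings are averaged.

    ratings[i] holds all crowd ratings of item i; reference[i] is the
    benchmark score (e.g. expert coding) of the same item.
    """
    rng = random.Random(seed)
    curve = {}
    for k in range(1, max_k + 1):
        correlations = []
        for _ in range(reps):
            # Resample k ratings per item with replacement and average them
            means = [sum(rng.choices(item, k=k)) / k for item in ratings]
            correlations.append(pearson(means, reference))
        curve[k] = sum(correlations) / reps
    return curve


# Simulated data: true valence plus rater noise, 15 crowd ratings per item
sim = random.Random(0)
truth = [sim.uniform(-1, 1) for _ in range(100)]
ratings = [[t + sim.gauss(0, 1) for _ in range(15)] for t in truth]
for k, r in bootstrap_rater_curve(ratings, truth, max_k=12, reps=50).items():
    print(k, round(r, 3))
```

With real data, the reference would be expert codes rather than a simulated truth, and deciding where the curve is “flat enough” is a judgment call that should be weighed against the cost of each additional rater.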

Last but not least,

- Lind, F., Eberl, J. M., Heidenreich, T., & Boomgaarden, H. G. (2019). When the Journey Is as Important as the Goal: A Roadmap to Multilingual Dictionary Construction. International Journal of Communication, 13, 4000-4020. [doi]

I know, I know, this is another paper from the Vienna group, essentially the same research group as the paper I said I love. But this is, in my opinion, the best methods paper dealing with multilingual data of the year. So I have to select two papers from the same group. Did I mention the 2017 Article of the Year Award winner of Communication Methods and Measures? It is by the same first author.

This paper is not about the goal. As framed by the authors, it is about the journey. Its area of study, automated content analysis of multilingual text data, is severely under-researched. The authors tried different ways of creating dictionaries for 7 languages, varying steps such as keyword preselection, keyword translation, and keyword evaluation. The “gold standard” is the path-of-least-resistance approach: translate the multilingual corpora into English using Google Translate and use the English dictionary. Honestly speaking, the multilingual dictionaries created do not perform well; the best one has an F1 score of 0.78. But I think the value of this paper lies in the error analysis: which methodological decisions can improve the performance of multilingual dictionaries? For example, keyword evaluation by both the researcher and a native speaker can significantly improve performance.
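F1 is the harmonic mean of precision and recall, so a score of 0.78 leaves room for errors on precision, recall, or both. A minimal sketch of how a keyword dictionary is scored against a gold standard; the German keywords, documents, and labels are invented for illustration and have nothing to do with Lind et al.’s actual dictionaries.

```python
def dictionary_classify(doc, keywords):
    """Label a document as relevant (1) if it contains any dictionary keyword."""
    return int(bool(set(doc.lower().split()) & keywords))


def f1_score(predicted, gold):
    """Harmonic mean of precision and recall for the positive class (1)."""
    tp = sum(p == 1 and g == 1 for p, g in zip(predicted, gold))
    fp = sum(p == 1 and g == 0 for p, g in zip(predicted, gold))
    fn = sum(p == 0 and g == 1 for p, g in zip(predicted, gold))
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Invented German keyword list and hand-labelled gold standard
keywords = {"migration", "flüchtlinge", "asyl"}
docs = [
    "Neue Regeln für Asyl beschlossen",   # relevant, matched
    "Flüchtlinge erreichen die Grenze",   # relevant, matched
    "Migration von Vögeln im Herbst",     # bird migration: a false positive
    "Grenzkontrollen werden verschärft",  # relevant, but no keyword: a false negative
    "Der Haushalt wurde verabschiedet",   # irrelevant, not matched
]
gold = [1, 1, 0, 1, 0]
predicted = [dictionary_classify(d, keywords) for d in docs]
print(round(f1_score(predicted, gold), 2))  # 0.67
```

Looking at which documents produce the false positive and the false negative is a toy version of the error analysis that makes the paper valuable: a native speaker would likely flag both the ambiguous keyword and the missing one.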

At least this paper does not suggest that we use Google Translate blindly for comparative communication research. And that brings us to the last honorable mention…

Honorable mention: Reber, U. (2019). Overcoming Language Barriers: Assessing the Potential of Machine Translation and Topic Modeling for the Comparative Analysis of Multilingual Text Corpora. Communication Methods and Measures, 13(2), 102-125. [doi]

I have a lot of doubts about this paper. This paper by a researcher from Bern compares topic model solutions from a multilingual text corpus using two machine translation services (Google Translate and DeepL) and two approaches (full-text translation and term-by-term translation; the latter essentially translates document-term matrices). My doubts are mostly about the topical differences he found between the German and English text data. Can we be 100% sure that these topical differences are substantive differences rather than artifacts of machine translation? I don’t think the author has adequately validated his findings. I still don’t believe that this paper, or Lucas et al. (2017) or De Vries et al. (2018) for that matter, can legitimize the use of machine translation and topic modeling for the comparative analysis of multilingual text corpora. Having said that, this paper is interesting because it provides a yardstick for evaluating methods dealing with multilingual data, namely robustness and integrability.
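Term-by-term translation of a document-term matrix, as described above, can be sketched in a few lines. This is a minimal sketch, not Reber’s code: the DTM is assumed to be a list of `Counter` objects, and the lexicon is a hypothetical hand-written stand-in for a machine translation service. It also makes one possible source of translation artifacts visible: distinct German terms can collapse into one English term, and terms without a translation silently disappear.

```python
from collections import Counter


def translate_dtm(dtm, lexicon):
    """Translate a document-term matrix term by term.

    dtm: list of Counters mapping source-language terms to counts.
    lexicon: source term -> target term (assumed to come from an MT service).
    Terms mapping to the same target are merged; untranslated terms are dropped.
    """
    translated = []
    for doc in dtm:
        new_doc = Counter()
        for term, count in doc.items():
            target = lexicon.get(term)
            if target is not None:
                new_doc[target] += count
        translated.append(new_doc)
    return translated


german_dtm = [Counter({"steuer": 2, "abgabe": 1, "bund": 1, "ländle": 1})]
lexicon = {"steuer": "tax", "abgabe": "tax", "bund": "federation"}  # hypothetical
print(translate_dtm(german_dtm, lexicon))  # [Counter({'tax': 3, 'federation': 1})]
```

A topic model fitted on the translated matrix never sees the distinction between “Steuer” and “Abgabe”, nor the regional term that had no entry, which is one reason I would want topical differences validated before calling them substantive.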

Okay, with this blog post, I am probably no longer a nice person who only praises other researchers. I have also voiced my discontent with some of the papers listed. In 2020, I would like to see more methodological papers with shared code and data, and more methods for dealing with multilingual datasets.